AI-Powered Postgres index suggestions

By Rafer Hazen |

Today we're releasing a new feature in PlanetScale Insights: AI-powered index suggestions for PostgreSQL databases. This feature monitors your database workload and periodically suggests new indexes to improve query execution speed and overall database performance. This blog post describes how we used LLMs (large language models) combined with robust validation to generate high quality index suggestions.

Overview

Finding the right indexes for your database is one of the most common and critical operations in maintaining database health. It's also something that needs to be done not just when tables are created, but consistently revisited as an application evolves and query patterns change.

Our experience using LLM chat tools made it clear that LLMs are capable of finding indexes to improve query performance. Given the right set of queries to improve, LLMs frequently find an optimal index. There were two issues we needed to solve, though.

First, LLMs are not always great at deciding when something needs to be modified. Given a problem, LLMs are great at finding an answer but can't always be trusted to decide if maybe nothing needs to change at all. We are well positioned to solve this issue by using the query performance data Insights collects to only ask for index recommendations after first verifying that there are queries present that are likely to need an index.

Second, sometimes LLMs produce inaccurate results. As anyone who's used LLM tools for software development can tell you, it's crucially important to validate LLM generated solutions before shipping them to production. Before we suggest that a customer make changes to their production database, we need to make sure that our suggestions will actually have the desired effect. To accomplish this we measure the estimated performance of the relevant queries with and without each suggested index, and only show suggestions that result in a substantial improvement.

Our goal with Postgres index suggestions is not just to save you the trouble of asking LLMs for suggestions on your own, but to use the unique data and capabilities available in Insights to produce the best overall results.

Asking the right question

Two things determine the right set of indexes for a given database:

- The database schema

- The workload (i.e. what queries are being run)

LLMs, like humans, produce better answers if they are asked better questions. Our first task, then, was to filter down the list of query patterns to those that were most likely to actually benefit from an index. When we manually examine a database to find queries that might benefit from an index, we typically look for query patterns with a high ratio of rows read to rows returned. If a query is reading a much larger set of rows than it is returning, it's an indication that an index (or a more selective index) could improve response times.

We also filter the set of query patterns to those are using significant resources. In particular we require that the query pattern is responsible for at least 0.1% of the aggregated runtime of all queries, and that it has been run a minimum number of times. Since indexes incur storage, memory and write overhead, we want to avoid suggesting indexes for ad-hoc or infrequently run queries.

Once we have a set of candidate queries, we filter the schema down to the tables referenced by the query. This keeps the prompt smaller and more focused.

The final component of our prompt is that we ask the LLM to include a reference to the queries that each new index is designed to improve. This data is used in the validation step.

Validation

Now that we have a list of candidate indexes, we can perform the most crucial step: validation. Asking users to create indexes on their production database is an inherently high-stakes activity, and we want to be certain about the quality of the underlying suggestions.

To this end, we perform 2 validation steps:

- Parse the generated

CREATE INDEXstatements to ensure that they are syntactically valid and of the correct form. - Evaluate each candidate query with and without the related index, and ignore any index suggestion that doesn't improve at least one candidate query.

To evaluate the effect of each index, we use the HypoPG extension. HypoPG lets us create hypothetical indexes that do not actually exist (and therefore have no overhead) but which the planner can use in the context of EXPLAIN commands. This allows us to find an estimated cost using the actual Postgres planner, and determine if the predicted cost improvement is substantial enough to justify recommending a new index.

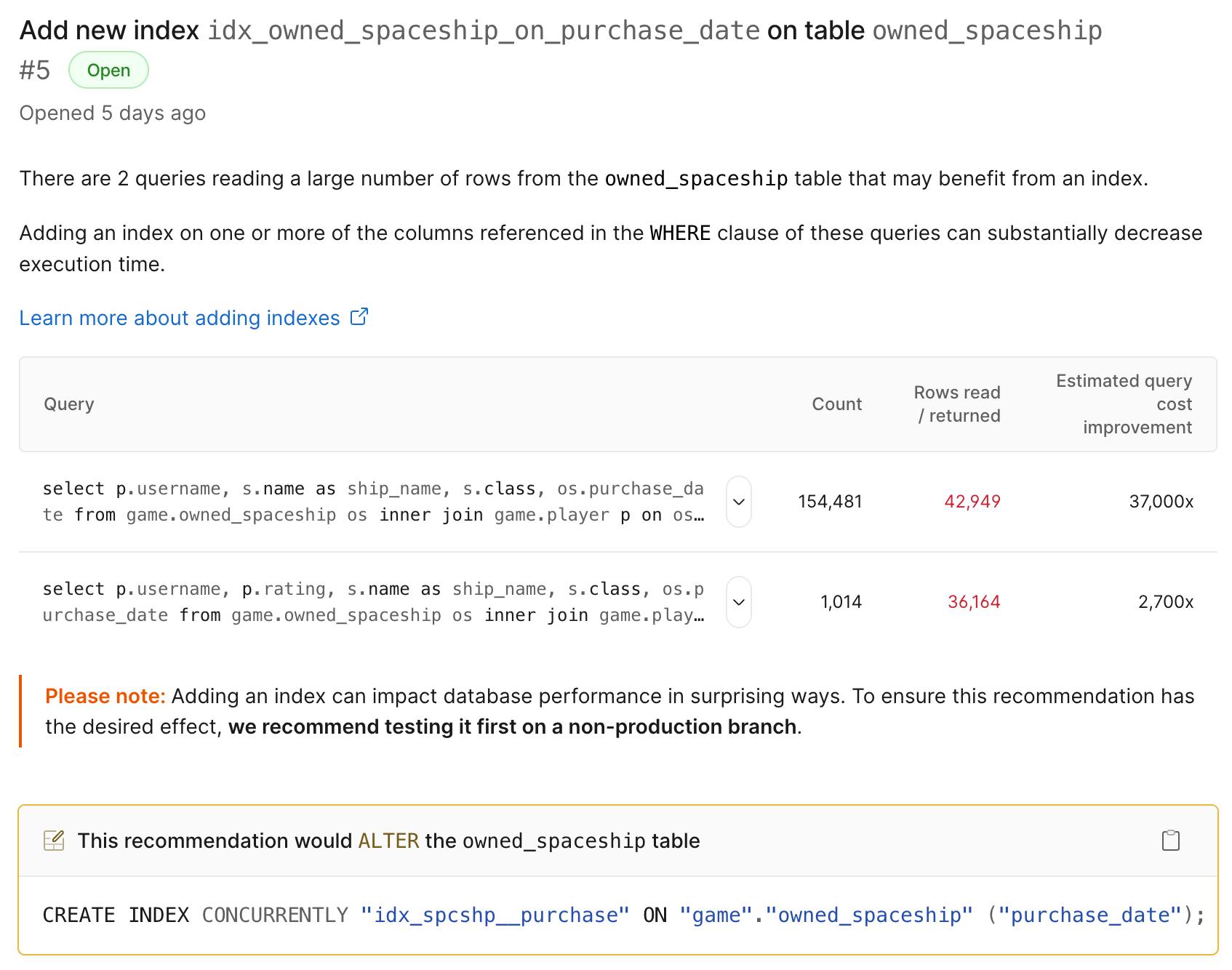

If an index suggestion passes both phases of validation, Insights generates a new index recommendation. Insights shows index suggestions with a table of the queries they are designed to improve, including the estimated reduction in query cost.