Performant database tree traversal with Rails

By Mike Coutermarsh |

We recently solved an interesting performance problem in our Rails API when performing a tree traversal.

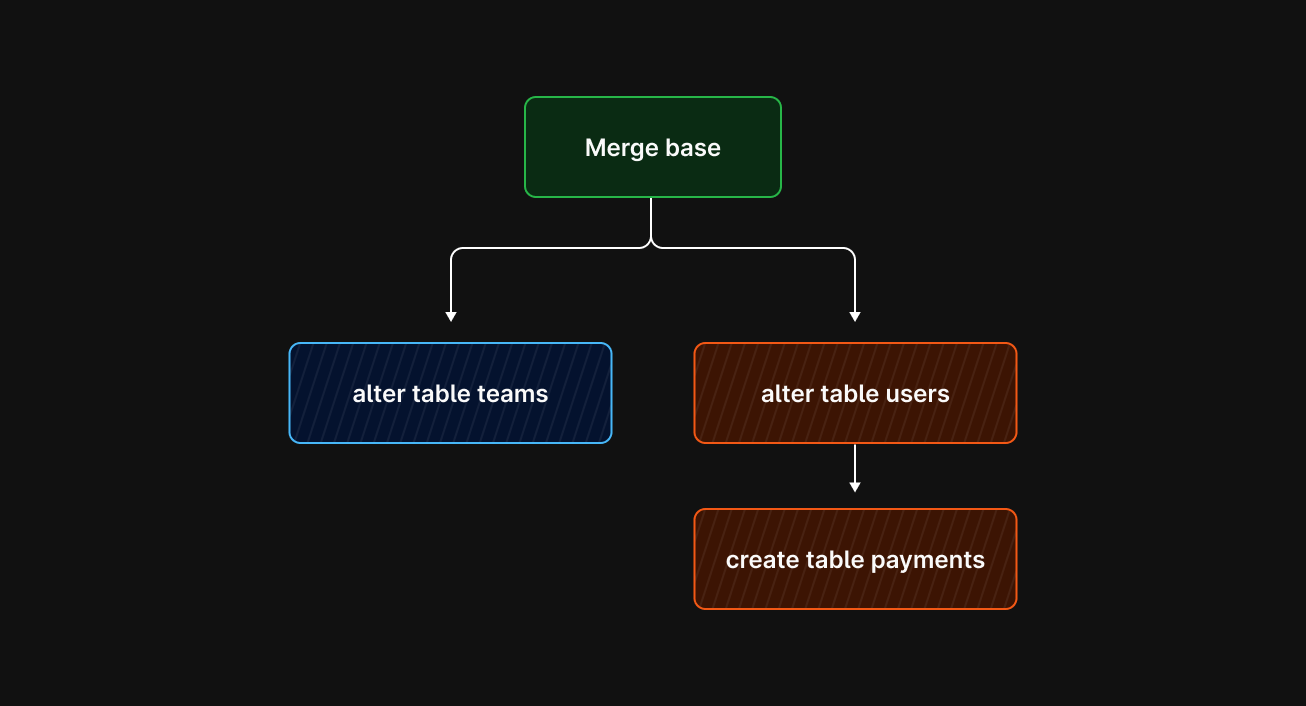

We have some code that figures out a 3-way merge for your database schemas. To do this, we need to calculate a merge base between two schemas (like git!).

Our Rails API keeps track of changes to each PlanetScale database schema. We call each of these changes a "schema snapshot," similar to a git commit that stores the state of the schema at a specific time. Each snapshot can have one or two parents. When merging branches, we perform a breadth-first search on the history of each change until we find the common ancestor between both branches. This is the merge base.

Going one-by-one is slow

Finding the merge base involves checking each snapshot in the history of both branches.

Usually, this is fast because most databases only have a few changes.

Others, though, would have thousands. This is where we'd run into performance issues. Since we were doing a database query for every step as we traversed the tree. Even though each query was fast, the network time alone added up quickly.

select * from schema_snapshots where id = 20 select * from schema_snapshots where id = 21 select * from schema_snapshots where id = 22 select * from schema_snapshots where id = 23 select * from schema_snapshots where id = 24 // *thousands more queries*

Solving the N+1 problem

This is a classic "N+1" performance problem. For each step in the process, we trigger another query.

Usually, fixing these in Rails is quite simple. There is an includes method for preloading the data we need. Unfortunately, in this case, due to the data structure, the normal preloading techniques do not work. We had to invent something new.

In-memory cache

First, we started by creating an in-memory cache for storing the snapshots. This is a temporary cache that only exists to find the merge base. In-memory is important here because our primary performance issue was caused by the sheer number of network requests.

class SnapshotMergeBaseCache

attr_reader :store, :hits, :misses

def initialize

@store = {}

@misses = 0

@hits = 0

end

def get(id)

if @store[id]

@hits += 1

return @store[id]

end

@misses += 1

add(SchemaSnapshot.find(id))

end

def add(node)

@store[node.id] = node

end

end

This gives us a place to store all of our snapshots in memory. It uses a simple hash where the id is the key, and the value is the snapshot data.

The add method puts a snapshot into the cache. The get method is for retrieving it. The get method also keeps stats on the hits/misses. We used these stats to understand how well it worked once in production.

Preloading the cache

Now that we have the cache, the next step is bulk-loading it with snapshots. Conveniently, the snapshot history is pretty predictable when finding the merge base. We could preload the X most recent snapshots for each branch and drastically reduce the number of trips back to the database.

cache = SnapshotMergeBaseCache.new from_branch.recent_snapshots.limit(FROM_PRELOAD_COUNT).each do |snap| cache.add(snap) end into_branch.recent_snapshots.limit(INTO_PRELOAD_COUNT).each do |snap| cache.add(snap) end

Now, when running our breadth-first search, we can use cache.get(id) to find the next node. It hits the cache in most cases, avoiding the network request and solving our performance problem.

Rolling out & testing

Making changes like this can be tricky. There is often a wide gap between what you expect to happen and the reality of production.

First, we needed to ensure that it was accurate. We ran a few tests where we calculated the merge base using both the old and new methods for thousands of databases. This made us confident the new code was returning the correct results.

We then used feature flags to test rolling out the new code path and recorded data on how it performed. The hits and misses data proved useful for fine-tuning the number of snapshots we preloaded. After a couple iterations, we released it to 100% of our customers.

Alternative solutions

Adding an in-memory cache is just one way of solving this problem. This worked out best for us due to the high number of snapshots we needed to traverse for some databases. It was also simple to layer this solution onto our existing code without many major changes. This reduced the risk when rolling it out.

Database recursive CTE

One option for solving this is letting the database do the work. This can be accomplished with a recursive common table expression. With this, the database could follow the pointer to each record until it finds the common merge base.

Materialized path

The materialized path technique is a way to represent a graph in a SQL database. It stores the relationship history in a single column, such as 20/19/15/10/5/3/1. By doing this, you can then look at the history of two nodes and find their common parent.

This is a great option that works well for tree structures with a known limit. In our case, storing thousands of relationships didn't make this feasible.